Compile time programming is cool

You might never have used it to its full potential

First of all, to all you sweet JS-only users … please bug off. I can’t help you, and god can’t either. I am not sure why you even clicked on this article, given that your best contender is a fully ignorable linter that compiles right back to the same shitty language ~_~

Now that that’s out of the way, hello there to the rest of you sane people using relatively sane languages. You must have heard of “metaprogramming”, an umbrella term that covers a whole lot of things. I like the subset of it that I call “run thingy-ma-jig fast” programming. Other things, like reflection, are technically metaprogramming too, but they are not the focus of this article.

This article has two parts. The first is why I like compile time programming and why you should consider it. The second covers some implementable ideas in different languages that might come in handy when you are tackling a problem. Feel free to skip the implementations if you don't feel like reading them.

For those wondering, imagine how 2’s complement and negative numbers relate.

My first introduction to such a thing was in C++. When I accidentally pressed “go to definition (gd)”, my eyes burned to a crisp at the sight of this foreign language disguised as C++. My first reaction was “what the devil is this?”, and my 14-year-old ass ran around trying to understand it. Guess what? I couldn’t, so I gave up and moved on, remembering only the name that haunted me: “templates”.

A few years later, young Sid was exploring his way around programming, making games, apps, websites, renderers, and GPU compute projects using various languages and tools. He became a little familiar with the template and macro systems in different languages, but never explored them in depth because he never had a use for them.

I am writing this article because I never needed any in-depth compile time programming for the first 6 straight years of my journey. Perhaps you have dismissed it too?

The “ONE” question

Sometimes a lot of topics never make it into our peanut-sized brains for various reasons, but then comes that piece of shit question that sends you hurtling down the butt hole, I mean rabbit hole. For me this was: “hey, I already know the compute nodes at compile time, so can I “run thingy-ma-jig fast” by generating the compute graph at compile time?”

The above project is too ambitious. I will write about it if it doesn’t go in the dustbin, and yes, I had to do it in C++; there was no other sane way : (

What are my favorite languages for compile time stuff?

The answer was yes, of course, but that's my use case; you will want to find something that is useful to you.

Why bother learning?

This isn't a programming principles or advice kind of article, but I would still like to say: "learn your f-in tool". All programming languages are tools, and your computer is a tool. If you can make things faster or more understandable with minimal effort, why not take the time to learn how? There is an argument to be made against learning a tool excessively, but a concept that applies in many cases (i.e. in many languages), like our topic, can be a pretty good hammer to bonk a problem with.

Compute for me please!

Zig is the absolute peak of compile time evaluation design in my opinion. While other languages like Rust and C++ require you to annotate the functions that can be evaluated at compile time, in Zig you just plonk a comptime in front of basically anything and it's done.

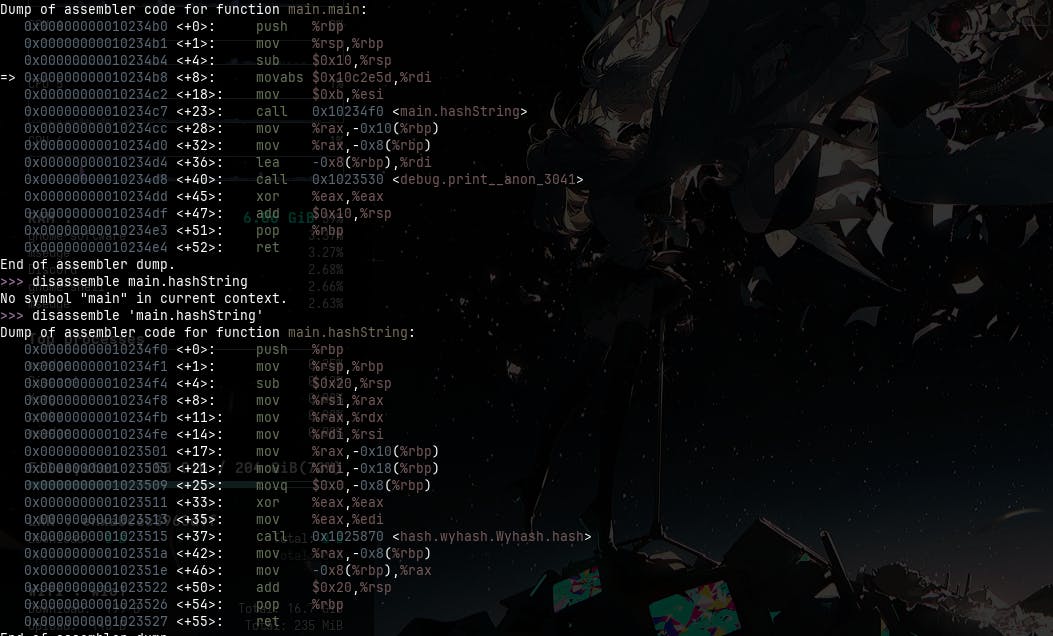

The goal of this example is to compute the hash of a string at compile time using the standard library. The code above is plain, very generic Zig, and it produces the assembly below (a debug build, not release, because such a simple function would be inlined and optimized away even without comptime). You don't need to know assembly: you can just see the main function calling another function, which calls the std function, which takes time at runtime for no reason.

How do we make this faster? Just plonk a comptime in front of the hashString function call.

zig comptime = (•_•) ( •_•)>⌐■-■ (⌐■_■)

There it is: a value generated entirely at compile time, which is simply printed to the console when the program runs. Cool, isn't it? I hope you think it's cool, otherwise I will be sad : (
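For comparison, here is roughly what the same trick looks like in C++, where the function has to be annotated before the compiler will run it for you. This is only a sketch: `fnv1a` and `kHelloHash` are names I made up, and FNV-1a is a stand-in hash that I picked because it is short, not necessarily the algorithm Zig's hashString uses.

```cpp
#include <cstdint>
#include <string_view>

// FNV-1a (64-bit), used here purely as a small illustrative hash.
// `constexpr` is the annotation that permits compile-time evaluation.
constexpr std::uint64_t fnv1a(std::string_view text) {
    std::uint64_t hash = 14695981039346656037ull;  // FNV offset basis
    for (char c : text) {
        hash ^= static_cast<unsigned char>(c);
        hash *= 1099511628211ull;                  // FNV prime
    }
    return hash;
}

// Initialising a constexpr variable forces the call to run in the compiler.
constexpr std::uint64_t kHelloHash = fnv1a("hello");

// static_assert only accepts compile-time constants, so this line compiling
// is the proof that no hashing is left for run time.
static_assert(kHelloHash == fnv1a("hello"));
```

Printing `kHelloHash` at run time then just loads a constant. The difference from Zig is that the decision is made on the function (`constexpr`) rather than at the call site.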

Compile time hash maps?

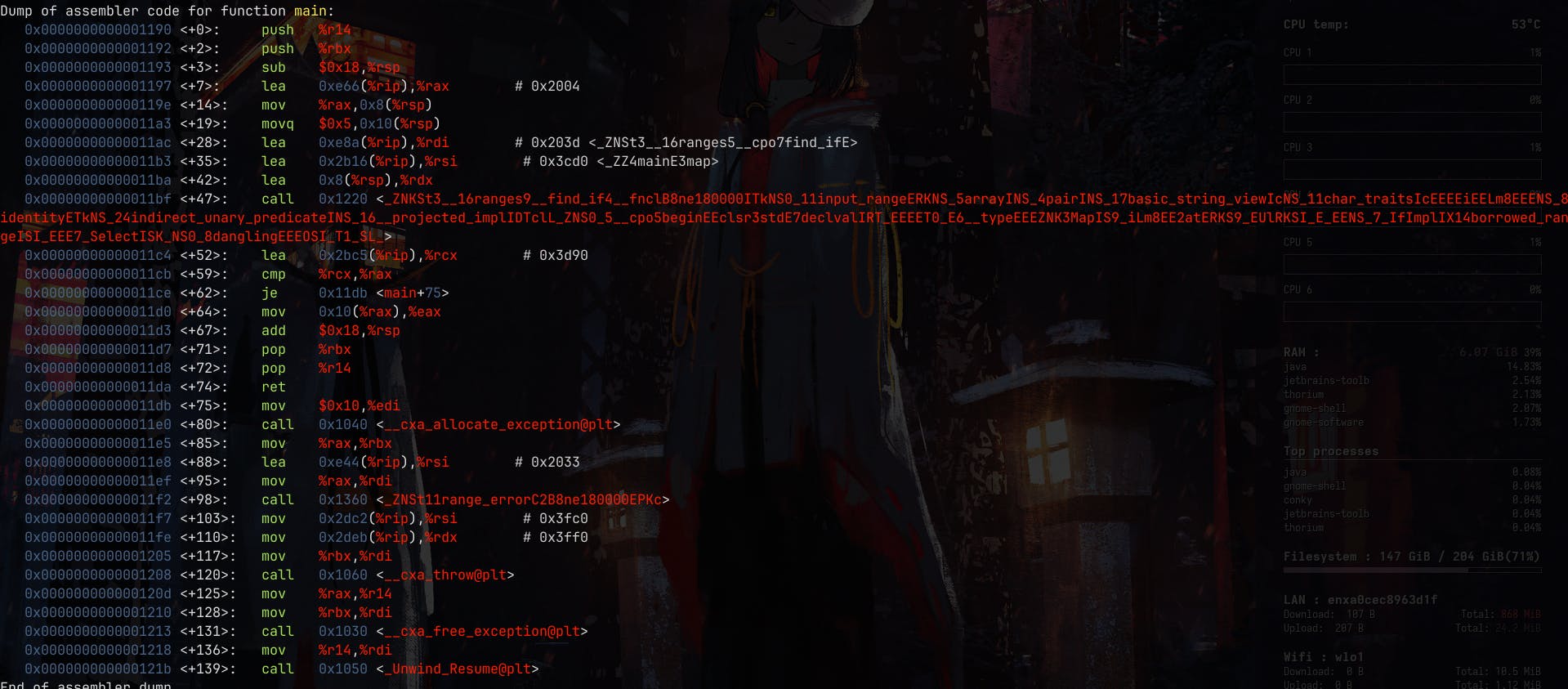

There are a lot of times where we have a one-to-one relation between two sets of thingies, like enums to strings or animal names to scientific names, and both sets are known at compile time. The usual method in languages like Rust or C++ is to use a conversion function with a match or switch, or a hashmap. The example in this section is such a case in C++.
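A minimal sketch of that kind of runtime lookup, assuming a table from color names to numbers. The function name `color_value` and every entry except black's 7 are made up for illustration.

```cpp
#include <string_view>
#include <unordered_map>

// Hypothetical lookup table; only "black" -> 7 is taken from the article.
int color_value(std::string_view name) {
    // The map is built at run time, the first time this function is called.
    static const std::unordered_map<std::string_view, int> colors = {
        {"red", 1}, {"green", 2}, {"blue", 3}, {"black", 7},
    };
    // at() hashes the key and probes the table on every call,
    // and throws std::out_of_range if the key is missing.
    return colors.at(name);
}
```

Every key and value is sitting right there in the source, yet each call still pays for hashing and probing.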

This is a very simplistic use case, but this sort of thing exists all over the place. Left alone, it incurs a runtime cost every time map.at is called, even though there is no need for it. Below is the disassembly of the fully optimized code, O3'ed out of its mind, and this is just the main function.

(҂◡_◡) ᕤ endure this madness.

Now there are two ways to solve this in basically any language if you need a data structure like a hashmap.

1. Use a standard library data structure, if it supports compile time evaluation.

2. If your standard library does not have a compatible data structure, either:

   - Make your own (what we will do; technically it is not a map, but it works for most use cases up to 10000 or so key-value pairs without any problems). That should be more than enough for most things known at compile time.

   - Use a library which has such data structures; frozen (C++) is one example.

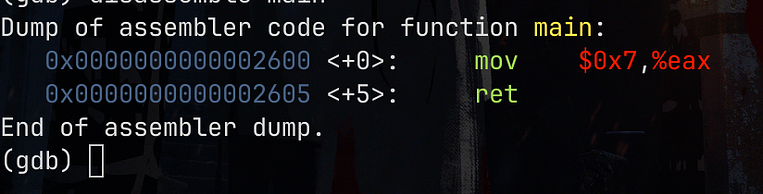

In this implementation we simply search linearly through an array, nothing fancy pants, because for small tables, like the ones you meet in the real world, linear search is faster than binary search in the constexpr world of C++.
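In case the image doesn't load, here is a minimal sketch of such a linear-search "map". The names `ConstexprMap`, `kColorEntries` and `kColors` are mine, and every entry except black's 7 is made up, so treat it as one possible shape rather than the exact code from the screenshot.

```cpp
#include <array>
#include <cstddef>
#include <stdexcept>
#include <string_view>
#include <utility>

// Not a real hash map: just a fixed-size array of pairs searched front to back.
template <typename Key, typename Value, std::size_t N>
struct ConstexprMap {
    std::array<std::pair<Key, Value>, N> entries;

    constexpr Value at(const Key& key) const {
        for (const auto& entry : entries) {
            if (entry.first == key) return entry.second;
        }
        // Reaching this during compile-time evaluation is a compile error;
        // at run time it throws like std::unordered_map::at does.
        throw std::out_of_range("key not found");
    }
};

// Hypothetical table; only "black" -> 7 is taken from the article.
constexpr std::array<std::pair<std::string_view, int>, 4> kColorEntries{{
    {"red", 1}, {"green", 2}, {"blue", 3}, {"black", 7},
}};

constexpr ConstexprMap<std::string_view, int, kColorEntries.size()> kColors{kColorEntries};

// The lookup is resolved by the compiler, so nothing is searched at run time.
static_assert(kColors.at("black") == 7);
```

With a constant key, a call like `kColors.at("black")` should fold down to the plain constant, while a key that only arrives at run time still works and simply does the linear scan then.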

And voila, we have our single instruction returning 7, which is black's value. Other than the constexpr, the static (optional and not really needed here, but good practice), and the constructor call, there are no changes to the main function's code.

Keep learning, be cool, and stay away from script kiddies, they are a bad influence. Make sure to learn your tools.

(̿▀̿‿ ̿▀̿ ̿) Sid out.